Self-hosted LLM

A Self-Hosted LLM (Large Language Model) allows organizations to deploy and manage powerful language models on their own infrastructure. This setup provides full control over the data, security, and customization of the AI models, making it an ideal solution for enterprises requiring privacy, scalability, and tailored functionalities.

Unlike cloud-hosted alternatives, a self-hosted LLM ensures that all data remains within the organization's network, which is crucial for industries with strict data compliance requirements.

Key Features of Self-hosted LLM:

- Data Privacy and Security: Maintain full control over data by hosting the LLM on your own servers, avoiding exposure to third-party clouds.

- Customization: Tailor models with proprietary data, integrate with internal tools, and adjust parameters to fit business needs.

- Scalability: Scale deployments from small projects to large enterprises using existing infrastructure.

- Cost Efficiency: Reduce costs by leveraging current hardware and avoiding recurring cloud fees.

- Compliance and Control: Ensure adherence to industry regulations and data governance policies.

- Performance Optimization: Optimize resource allocation and infrastructure for specific computational demands.

- Integration: Smoothly connect the LLM with existing systems, databases, and workflows.

- Offline Capability: Operate without internet connectivity, ideal for secure or limited-connectivity environments.

Further information

- Read more detailed information on completions endpoints here.

- Read more detailed information on chat endpoints here.

- Read more detailed information on LLMs here.

Actions:

- Send prompt: Sends a prompt.

- Send chat prompt: Sends a chat prompt.
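As a rough illustration of how the two actions differ, the sketch below builds the request parts for an OpenAI-style completions call and a chat completions call. It assumes the actions map onto the standard OpenAI-compatible paths `/v1/completions` and `/v1/chat/completions`; the function names, the example base URL, and the model name are hypothetical and not taken from Workflow Automation itself.

```python
import json


def _auth_headers(api_key):
    # OpenAI-compatible servers usually expect a Bearer token.
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


def build_prompt_request(base_url, api_key, model, prompt):
    """Request parts for a plain text completion (assumed: Send prompt)."""
    return {
        "url": base_url.rstrip("/") + "/v1/completions",
        "headers": _auth_headers(api_key),
        "body": json.dumps({"model": model, "prompt": prompt}),
    }


def build_chat_request(base_url, api_key, model, user_message):
    """Request parts for a chat completion (assumed: Send chat prompt)."""
    messages = [{"role": "user", "content": user_message}]
    return {
        "url": base_url.rstrip("/") + "/v1/chat/completions",
        "headers": _auth_headers(api_key),
        "body": json.dumps({"model": model, "messages": messages}),
    }
```

The main difference is the body: a completions request carries a single `prompt` string, while a chat request carries a list of role-tagged `messages`.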

Which model can I use with Workflow Automation?

Any OpenAI-compatible model can be used, as long as the credentials (URL, Secret Key, and V1 Models) match. The endpoint must be publicly accessible.
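One way to check compatibility before creating the connection is to ask the endpoint which models it serves. The sketch below assumes that "V1 Models" refers to the standard OpenAI-compatible `/v1/models` route and that the server returns the usual `{"data": [{"id": ...}]}` list; the function names and the example URL are hypothetical.

```python
import json
import urllib.request


def build_models_request(base_url, secret_key):
    """Prepare a GET request for the (assumed) /v1/models route."""
    url = base_url.rstrip("/") + "/v1/models"
    headers = {"Authorization": f"Bearer {secret_key}"}
    return urllib.request.Request(url, headers=headers)


def extract_model_ids(response_text):
    """Pull the model IDs out of an OpenAI-style model list response."""
    payload = json.loads(response_text)
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(base_url, secret_key, timeout=10):
    """Call the endpoint and return the model IDs it reports."""
    request = build_models_request(base_url, secret_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_model_ids(response.read().decode("utf-8"))
```

For example, `list_models("https://llm.example.com", "your-secret-key")` would return the model IDs the endpoint advertises, if the server follows this convention.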

How does it work with the different Workflow Automation plans?

- For the Basic plan, the endpoint must be publicly accessible.

- For the Pro plan, the endpoint must be accessible via VPN or publicly.

- For the Self-hosted plan, the endpoint must be accessible from the deployment environment.
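The accessibility rules above can be partly pre-checked from the endpoint URL alone. The following is a minimal sketch, not an official check: it only flags hosts that are clearly not public (`localhost` or a private, loopback, or link-local IP literal). A DNS name cannot be classified without resolving it, so the hypothetical helper `is_obviously_private` returns `False` in that case.

```python
import ipaddress
from urllib.parse import urlparse


def is_obviously_private(url):
    """Return True if the URL's host is clearly not publicly reachable."""
    host = urlparse(url).hostname
    if host is None:
        raise ValueError(f"no host found in URL: {url!r}")
    if host == "localhost":
        return True
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        # A DNS name: cannot tell without resolving it, so do not flag it.
        return False
    return address.is_private or address.is_loopback or address.is_link_local
```

An endpoint flagged by this helper would not satisfy the Basic plan's requirement, but it could still work on the Pro plan via VPN or on the Self-hosted plan if the deployment environment can reach it.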

Connect with Self-hosted LLM:

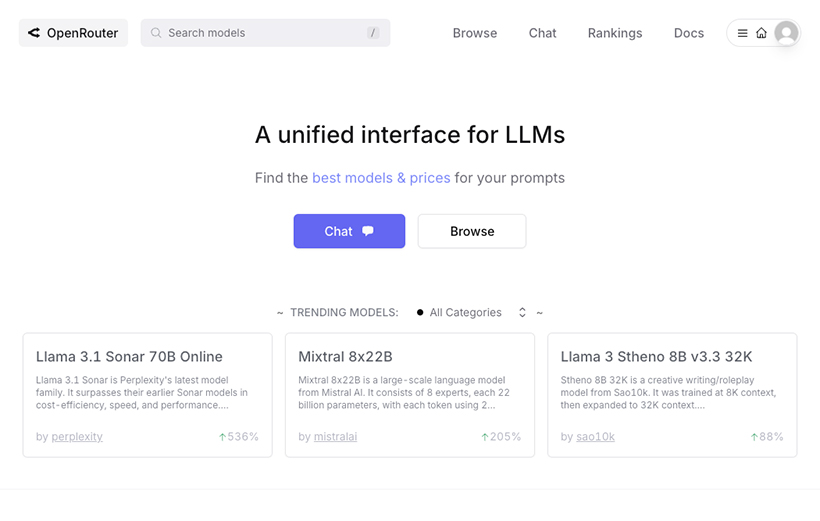

Log in to your openrouter.ai account.

INFO

Alternatively, you could also use another AI platform that provides access to OpenAI-compatible large language models through a unified API.

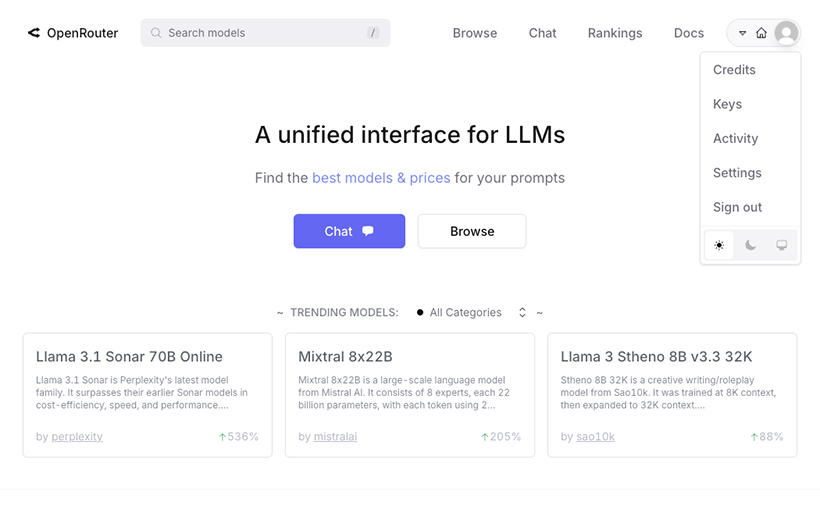

Click the User icon in the top right corner and navigate to Keys.

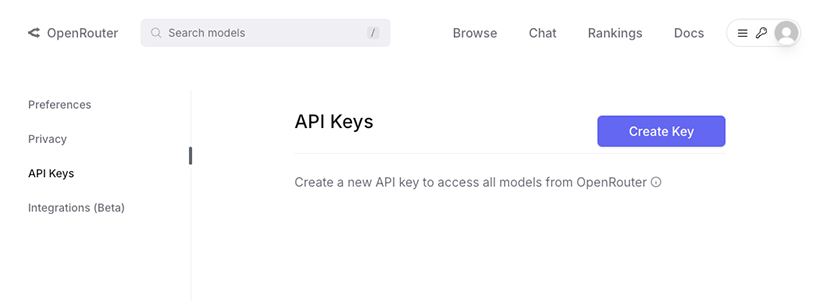

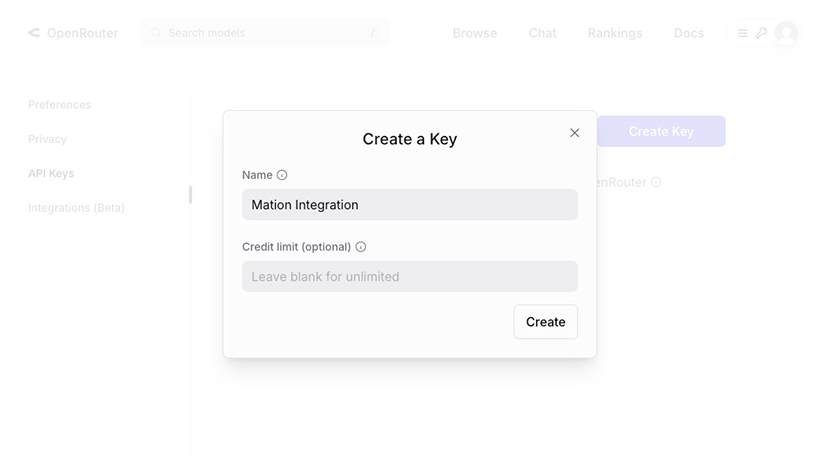

Click the Create Key button.

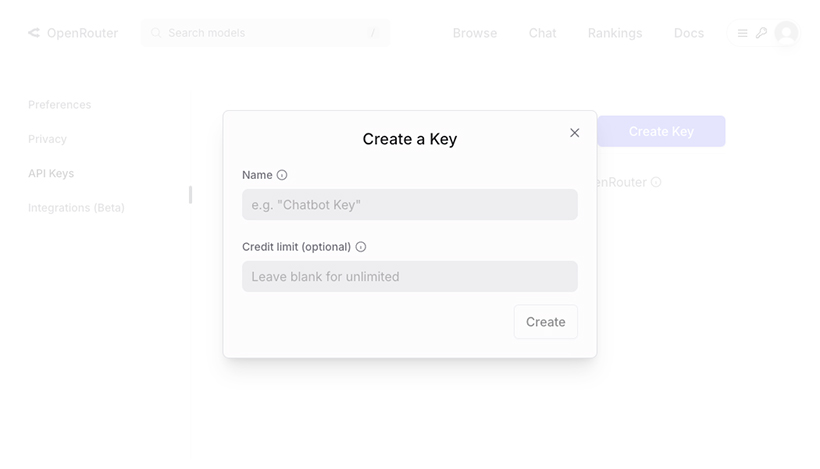

A pop-up opens.

Enter a Name for your API Key. Optionally, you may enter a Credit limit. Click the Create button.

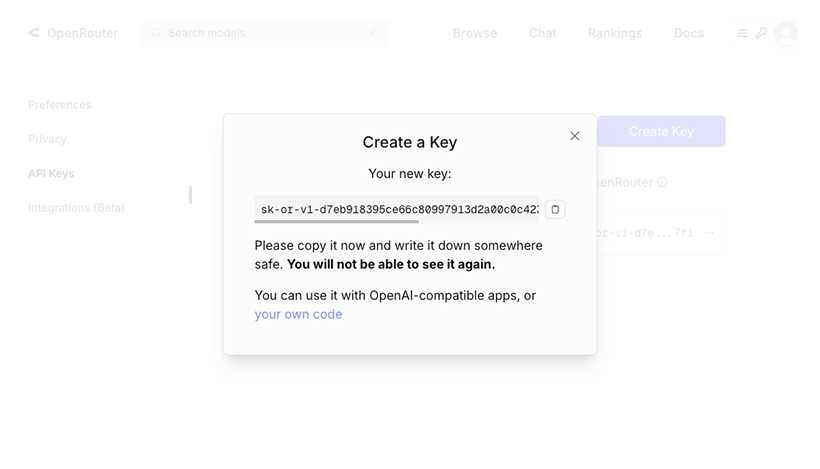

Your new API Key is revealed.

WARNING

Make sure to copy and save your API Key now in a secure location for later use, since you will not be able to see it again.

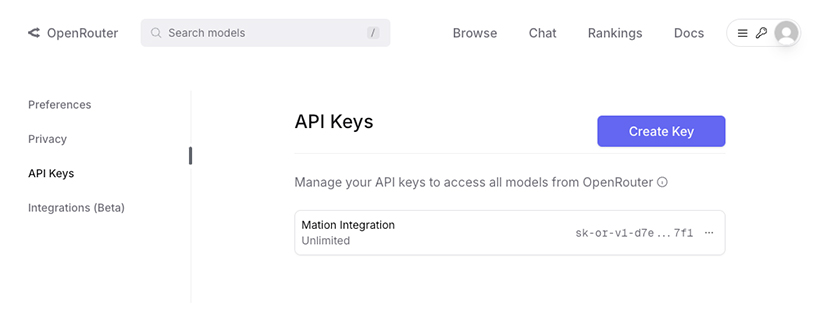

Close the pop-up window to access the API Key overview page.

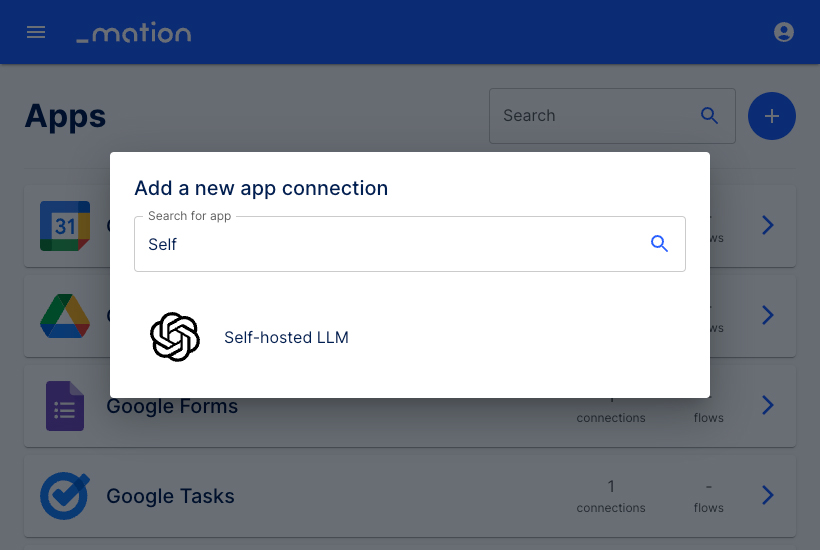

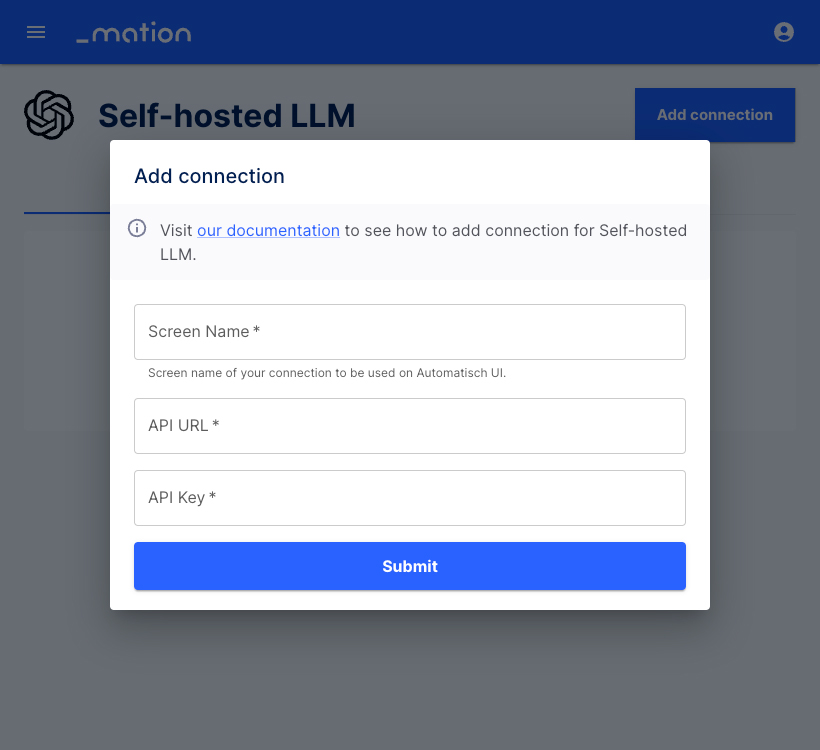

Go to Workflow Automation and navigate to Apps. Click the + Add Connection button. In the pop-up, select Self-hosted LLM from the list.

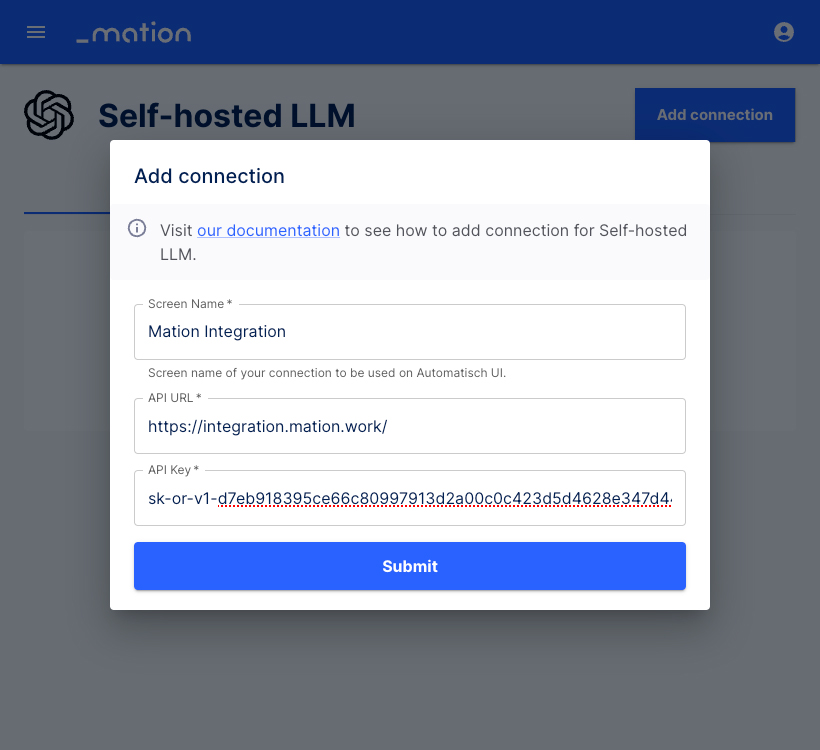

Enter a Screen Name.

Paste your Workflow Automation application instance URL into the API URL field.

Paste the API Key you saved earlier into the API Key field.

Click the Submit button.

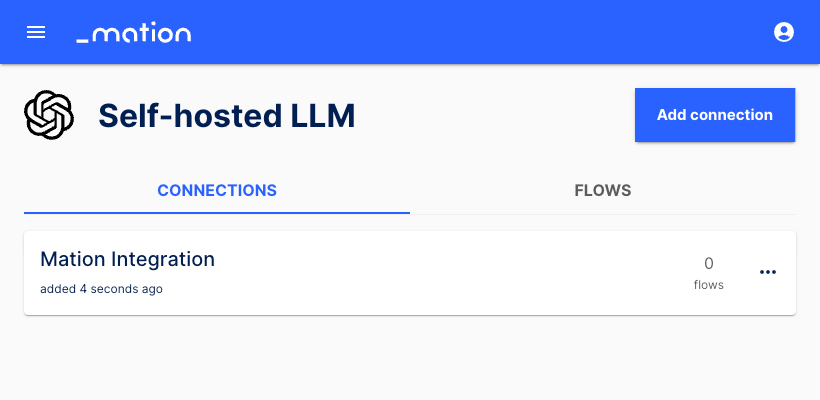

Your Self-hosted LLM connection is now established.

Start using your new Self-hosted LLM connection with Workflow Automation.